In my previous blog, I emphasized the dangers of data leakage into the Generative AI (GenAI) clouds and offered a first solution for those developing GenAI applications: using the more privacy-protected version of OpenAI’s GPT4 offered through Microsoft Azure. While that seems a reasonable place to start today, training or fine-tuning your own local model, using state-of-the-art smaller models that are right for your business, can also bring benefits in both data protection and operating cost. That said, such smaller models resemble the ones behind the experience you have had with Siri and Alexa over the years: they are good at some things and not good at many others. The current best practice is that before you take the plunge into managing a private LLM, you first build a prototype using a more sophisticated usage model of GPT4 on Azure (or, when available, OpenAI), where you are guaranteed that the prompt is not retained or used for training, and you can get the best of what these rapidly evolving platforms offer. Presumably, others will soon ship usage models as secure as the one Azure offers today and OpenAI has promised. In all cases, maintaining a carefully managed set of proprietary data that you can expose to the ever-improving capabilities of these new LLMs will give you a good moat, and the more expertise you build at the intersection of your ever-growing dataset and the capabilities of LLMs, the deeper your moat will grow.

Working with GPT4 In a Domain-Optimized and Data-Secure Architecture

The key thing to understand about GPT4 is that it is amazing at understanding the meaning of human speech, however garbled, long-winded, or otherwise less than optimal it may be. Within that context, you can get far better results, including conversationally over multiple queries, than you can even by tuning the small language models described below. Before getting to the high-level approach for using GPT4 in a data-secure way, here is some useful background reading:

- Read about how Microsoft Semantic Kernel Enables LLM Integration with Conventional Programs

- Read about an alternative approach, LangChain, with this starter article: Unlocking the Power of LLMs with LangChain

- Read about vector databases and how they are being used to provide context to LLMs – a good starter article is here

The general approach is as follows:

- You first need to find the intent of the query. You can either do that yourself, if the query can reasonably be expected to be clear and straightforward, or you can have the LLM interpret the client query (or conversational series of queries). Either way, you or the LLM ultimately determine the domain of interest (for example, a farmer asking about insecticides for tomatoes, or a customer asking when his order will arrive).

- Armed with the intent, get the contextual info from your vector DB (for example, info on all tomato insecticides or the customer’s order history) and provide that in the prompt.

- Also in the prompt, you “ground” the LLM by telling it, for example, “don’t answer anything about tomatoes that’s not contained in this prompt”. That helps keep the LLM from answering a farmer in Karnataka, India with information about growing tomatoes in Florida, USA.

- The context length of GPT-4 is limited to about 8k tokens, or about 6,400 words. There is also a version that can handle up to 32,000 tokens, about 25,000 words, but OpenAI currently limits access to it. Anthropic recently announced a 100k-token context window for its Claude model. Any of these should give the model plenty of room to do its thing for you and answer within your constrained context.
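The three steps above (find the intent, retrieve context, ground the prompt within a size budget) can be sketched in a few lines of Python. This is a minimal, self-contained illustration built on loud assumptions: the keyword-based `detect_intent`, the bag-of-words `embed` function and the in-memory `KNOWLEDGE_BASE` are hypothetical stand-ins for LLM-based intent detection, a real embedding model and a real vector database, and the word budget uses the rough 8k tokens ≈ 6,400 words ratio mentioned above. The resulting prompt would then be sent to your GPT4 deployment; that call is deliberately left out.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base, grouped by domain. In production this would be
# a vector database holding embeddings of your proprietary documents.
KNOWLEDGE_BASE = {
    "crops": [
        "Insecticide A is approved in Karnataka for whitefly control on tomato.",
        "Insecticide B is registered in Florida for tomato hornworm.",
    ],
    "orders": ["Order 1234 shipped on Monday and is due to arrive Thursday."],
}

# Hypothetical keyword lists; you could instead ask the LLM to classify intent.
INTENT_KEYWORDS = {
    "crops": {"tomato", "tomatoes", "insecticide", "pest"},
    "orders": {"order", "delivery", "arrive", "shipped"},
}


def embed(text):
    """Toy 'embedding': a bag-of-words count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def detect_intent(query):
    """Step 1: pick the domain whose keywords best match the query, or None."""
    words = embed(query)
    scores = {name: sum(words[k] for k in kws) for name, kws in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


def retrieve(query, docs, k=2):
    """Step 2: return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_grounded_prompt(query, context, max_words=6000):
    """Step 3: ground the model in the retrieved context, within a rough word budget."""
    kept, used = [], 0
    for chunk in context:
        n = len(chunk.split())
        if used + n > max_words:  # ~0.8 words per token: 8k tokens is about 6,400 words
            break
        kept.append(chunk)
        used += n
    context_block = "\n".join(f"- {c}" for c in kept)
    return (
        "Answer ONLY from the context below. If the answer is not there, "
        "say that you don't know.\n\n"
        f"Context:\n{context_block}\n\nQuestion: {query}"
    )


def prepare_prompt(query):
    intent = detect_intent(query)
    if intent is None:
        return None  # e.g. ask a clarifying question or hand off to a person
    return build_grounded_prompt(query, retrieve(query, KNOWLEDGE_BASE[intent]))
```

Keeping intent detection, retrieval and grounding as separate functions makes it straightforward to swap the toy pieces for a real classifier and vector store later without touching the rest of the flow.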

With the above model, Microsoft guarantees (as will OpenAI when it ships its promised data-secure version) that the prompt will not be used to train the LLM for others’ benefit, nor will information from your prompt be shared with anyone. So your data is secure, and you get the best of state-of-the-art LLM language comprehension, multi-step conversations, and contextual information for your customers.

Training, or more likely Fine-Tuning, Your Own Model

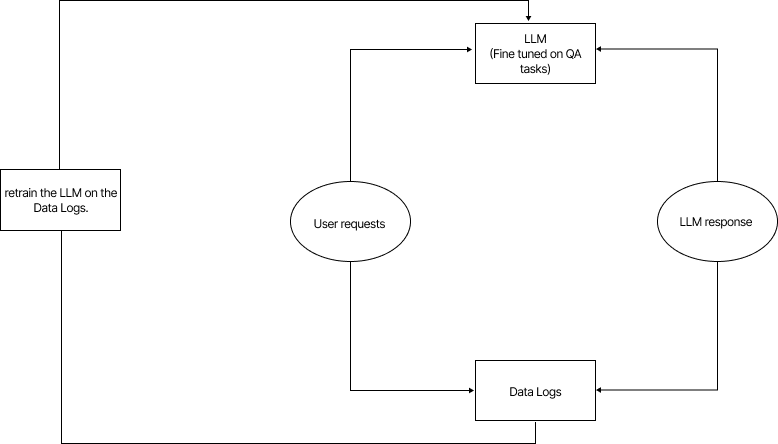

The diagram above represents the most likely way ChatGPT, or any large-scale, free-to-use ML model, operates. Over the past few years, GenAI systems have been improving in two ways: first, through major architectural overhauls, and second, by consuming massively more data.

Architectural changes are rare and require research breakthroughs. For example, the Transformer architecture on which GPT (Generative Pre-trained Transformer) is built was introduced by Google in the 2017 paper “Attention Is All You Need”, a new architecture that departed from earlier models built on LSTM (Long Short-Term Memory, 1995) networks. Architectural advances are hard to achieve, although the major players in the field research them constantly. Google’s paper had been cited over 62,000 times by January 2023, making it one of the most cited papers in AI, yet it took until OpenAI’s release of ChatGPT on November 30, 2022 for GPT models to break out as a truly transformative solution for the general public.

The more common way to improve AI models is to use more data, both for the original training and for fine-tuning. For example, OpenAI and other organizations can use millions of user prompts and questions to “fine-tune” their models. At the scale of OpenAI and the other tech giants, one can envision LLMs getting smarter across every domain through continuous fine-tuning coupled with ever-larger (and incredibly expensive) training runs. This is clearly not within the grasp of a modest-sized company applying GenAI to its specific needs.

Goal: Achieve Zero Data Leakage with Affordable Operations

One way to both access the latest and greatest in LLMs and guarantee zero data leakage would be to host an entire LLM on private servers. However, this approach is not feasible because of the resources it requires. The model behind ChatGPT (GPT-3.5) is widely believed to be on the scale of GPT-3, which has approximately 175 billion parameters; at 16-bit precision those weights alone occupy around 350 GB. Making even a single forward pass through such a model, predicting a single word or token, requires holding all of those parameters in memory, which means several high-end GPUs working together. That is clearly not practical for anyone with less funding than the tech giants.
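The arithmetic behind these resource figures is simple: the memory needed just to hold a model’s weights is its parameter count times the bytes used per parameter. The sketch below computes that for a few common precisions. It ignores activations, caches and framework overhead, so real requirements are higher, and the 7B entry is only an illustrative size for the kind of smaller open model discussed below.

```python
# Bytes used to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weights_gb(num_params, bytes_per_param):
    """Memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Illustrative model sizes: GPT-3 scale versus a small open model.
MODELS = {"175B (GPT-3 scale)": 175e9, "7B (small open model)": 7e9}

for name, params in MODELS.items():
    sizes = ", ".join(
        f"{precision}: {weights_gb(params, b):,.1f} GB"
        for precision, b in BYTES_PER_PARAM.items()
    )
    print(f"{name:<22} {sizes}")
```

At 16-bit precision that works out to about 350 GB for a 175-billion-parameter model versus about 14 GB for a 7-billion-parameter one, which is the gap that makes smaller models practical on a single GPU.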

To ensure absolute data privacy and eliminate data leakage, hosting one’s own model is the alternative to using GPT4 on Azure as described above. But the cost of hosting a model of ChatGPT’s size makes that impractical. How can we overcome this challenge? To answer that, we must first understand why a model like ChatGPT needs so many parameters.

Consider writing a book about an organization revolutionized by the early adoption of AI. If you write it in English, it spans 100 pages. Now, if you want Spanish readers to enjoy it too, you rewrite the book in Spanish, adding another 100 pages. The total length becomes 200 pages. However, the Spanish section is irrelevant to English readers and vice versa, so this redundancy wastes 100 pages for every reader.

ChatGPT and other large language models (LLMs) face similar challenges. While the above example is specific to language, the issue is more general with GPT. These models are task-agnostic, covering every domain of interest available on the web (and more), and providing answers or assistance in countless ways. You don’t need this for your business.

Narrowing the LLM Domain to What Matters to Your Customers

For a specific use case like recommending the best mobile phone to customers, you may not need all the bells and whistles of an LLM like OpenAI’s GPT, and you can trim down the model significantly. There is no need for the model to know how many pencils it would take to connect the Moon to the Earth when all we want is a phone recommendation. Let’s keep it lean and focused on what really matters: helping customers find their perfect phone without venturing into cosmic artwork. This would be the equivalent of giving English readers only the first 100 pages of the book, and Spanish readers only the last 100.

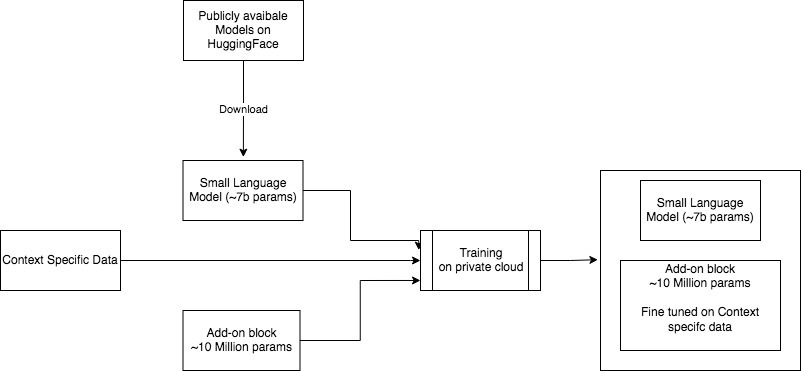

This concept leads us to the idea of re-training and/or fine-tuning smaller LLMs. Using the Hugging Face libraries, you can download LLMs that are a few GB in size and add custom layers that learn the context-specific information in your training data. Starting with good training data, and continuously improving it, is critical to success. Training such a system can be accomplished in a few hours, and the result can be deployed on a system with just a single GPU (or even a CPU, albeit with slower response times). The system housing this proprietary data and benefiting from continued domain-specific fine-tuning can be hosted locally, in a general-purpose secure cloud VM, or by one of the upstart providers specializing in AI-accelerated hosting, like Lambda Labs.

When it comes to the training dataset, more data is generally better, much as when preparing for a test: the more practice questions you attempt, the more likely you are to do well on the actual test. As a starting point, however, try to gather at least 100 to 1000 examples specific to your use case. These systems are considered few-shot learners, meaning they can generalize quickly from limited examples. Nonetheless, as you continue to collect more data, you should consider retraining the model.
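As a starting point for that dataset, here is a minimal sketch of cleaning domain examples and splitting them into training and validation sets before writing them out as JSON Lines. The `prompt`/`completion` field names and the sample phone-recommendation records are assumptions for illustration only; use whatever schema your fine-tuning notebook or library actually expects.

```python
import json
import random


def clean(records):
    """Strip whitespace and drop empty or duplicate prompt/completion pairs."""
    seen, out = set(), []
    for r in records:
        prompt = r.get("prompt", "").strip()
        completion = r.get("completion", "").strip()
        if not prompt or not completion or (prompt, completion) in seen:
            continue
        seen.add((prompt, completion))
        out.append({"prompt": prompt, "completion": completion})
    return out


def train_val_split(records, val_fraction=0.1, seed=42):
    """Shuffle reproducibly and hold out a fraction of examples for validation."""
    shuffled = list(records)  # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]


def write_jsonl(path, records):
    """Write one JSON object per line, a format many fine-tuning tools accept."""
    with open(path, "w", encoding="utf-8") as f:
        for r in records:
            f.write(json.dumps(r, ensure_ascii=False) + "\n")


# Hypothetical raw examples; aim for at least 100 to 1000 real ones.
raw = [
    {"prompt": "Which phone has the best camera under $500?",
     "completion": "<answer written by your product experts>"},
    {"prompt": "Which phone has the best camera under $500?",
     "completion": "<answer written by your product experts>"},  # duplicate
    {"prompt": "   ", "completion": "<empty prompt, will be dropped>"},
]
examples = clean(raw)  # only the first record survives
```

Holding out a validation set from the start gives you a consistent yardstick each time you retrain on a larger dataset.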

Steps to Tune/Train Your Own LLM

At their core, machine learning systems perform a series of non-linear transformations on input data to generate an output that aligns with expectations. In the case of a large language model, the number of transformations required before arriving at an answer is significantly higher, and it grows with the number of parameters in the model.
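To make “a series of non-linear transformations” concrete, the sketch below pushes an input vector through a small stack of layers, each a linear map followed by a `tanh` non-linearity, and counts the parameters (weights plus biases) involved. The layer sizes are arbitrary choices for illustration; an LLM applies the same basic idea with far larger and more elaborate layers.

```python
import math
import random


def dense(inputs, weights, biases):
    """Linear map: out[j] = sum_i inputs[i] * weights[i][j] + biases[j]."""
    return [
        sum(x * row[j] for x, row in zip(inputs, weights)) + biases[j]
        for j in range(len(biases))
    ]


def make_layer(n_in, n_out, rng):
    """Random weights (n_in x n_out) scaled by 1/sqrt(n_in), zero biases."""
    weights = [[rng.gauss(0, 1 / math.sqrt(n_in)) for _ in range(n_out)] for _ in range(n_in)]
    return weights, [0.0] * n_out


def forward(x, layers):
    """Apply each layer in turn: linear map, then the tanh non-linearity."""
    for weights, biases in layers:
        x = [math.tanh(v) for v in dense(x, weights, biases)]
    return x


def count_params(layers):
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)


rng = random.Random(0)
sizes = [16, 32, 32, 4]  # input width, two hidden layers, output width
layers = [make_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
print(count_params(layers))  # 1732 parameters across three layers
output = forward([0.1] * 16, layers)
```

Adding layers or widening them increases both the number of transformations and the parameter count, which is exactly the cost that grows so steeply at LLM scale.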

Conversely, a smaller model needs fewer transformations to reach a result, but its output is less closely aligned with the expected result. To address this, an additional block of layers is introduced at the output stage to bring the final result closer to the desired use case. This is achieved by training these final layers on data specific to that use case.
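The idea of keeping a pre-trained model fixed and training only an added output block can be illustrated without any ML library. In this toy sketch, which is a simplified stand-in rather than a real LLM fine-tune, a fixed random projection (`FROZEN_W`) plays the role of the frozen, downloaded model, and a logistic-regression head is the new trainable block. Only the head’s parameters change during training; the toy data and all names here are assumptions for illustration.

```python
import math
import random

rng = random.Random(0)
N_FEATURES = 8

# Stand-in for the downloaded, pre-trained model: fixed weights, never updated.
FROZEN_W = [[rng.gauss(0, 1) for _ in range(N_FEATURES)] for _ in range(2)]


def frozen_features(x):
    """Forward pass through the frozen 'base model' (2 inputs -> 8 features)."""
    return [math.tanh(x[0] * FROZEN_W[0][j] + x[1] * FROZEN_W[1][j]) for j in range(N_FEATURES)]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_head(features, epochs=200, lr=0.5):
    """Logistic-regression head trained with SGD; only head_w and head_b change."""
    head_w, head_b = [0.0] * N_FEATURES, 0.0
    for _ in range(epochs):
        for f, y in features:
            p = sigmoid(sum(w * v for w, v in zip(head_w, f)) + head_b)
            err = p - y  # gradient of the log-loss with respect to the logit
            head_w = [w - lr * err * v for w, v in zip(head_w, f)]
            head_b -= lr * err
    return head_w, head_b


def predict(x, head_w, head_b):
    f = frozen_features(x)
    return 1 if sum(w * v for w, v in zip(head_w, f)) + head_b > 0 else 0


# Toy "domain data": the label is 1 when x0 + x1 > 0.
points = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(200)]
data = [(p, 1 if p[0] + p[1] > 0 else 0) for p in points]

# The base is frozen, so its outputs can be computed once and cached.
cached = [(frozen_features(x), y) for x, y in data]
head_w, head_b = train_head(cached)
accuracy = sum(predict(x, head_w, head_b) == y for x, y in data) / len(data)
print(f"training accuracy: {accuracy:.2f}")
```

Because the frozen base never changes, its outputs can be cached before training, which is one reason training only the final layers is so much cheaper than training the whole model.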

However, a common question arises: if the final layers are the key contributors to the desired outcome, why not discard the rest of the model entirely? The reason is that language is a complex phenomenon. While the added layers fine-tune the answers to be relevant to the use-case, it is essential to understand that the intermediate layers play a crucial role in capturing the semantics of sentences, the interactions between words, and the overall meaning of the text. Discarding these intermediate layers would result in a loss of the model’s ability to comprehend language effectively and produce accurate results.

To comprehend the significant impact of adding layers, it is crucial to understand the concept of Transfer Learning. In essence, Transfer Learning refers to a model’s ability to apply knowledge gained from one task to solve another.

For instance, consider a person who has learned to drive a car. That person would likely learn to drive a truck faster, and with less training, than someone who has never operated any vehicle before.

I recommend reading this blog, which provides insights into fine-tuning Large Language Models (LLMs), for a deeper understanding of the topic. You can adapt this Colab notebook to fine-tune an LLM on your custom dataset. Please note that prior knowledge of Python and data processing is needed to follow the notebook’s contents.

After comprehending the concepts mentioned above and exploring the provided links, the diagram should become much clearer. The process involves downloading an entire model onto your local drive and then adding a few layers (typically determined through experience). You then proceed to train this model using your context-specific data. As a result, the final model comprises both the previously downloaded model and the additional layers that have been fine-tuned to your specific data.

Conclusion

From a rapid prototyping perspective, using GPT4 (or LLaMA or whatever else becomes available and is secure) as described in the introduction is probably the fastest and best way to get great results. As you move towards production, you’ll have more complex considerations around costs, latency, and domain optimization. Re-training and/or fine-tuning your own LLM has advantages but does not give your system access to the fast-moving foundation-level LLMs. Deciding between full custom solutions versus ready-to-use alternatives is always a matter of trade-offs. Understanding and quantifying the advantages and disadvantages is what will set you apart from the rest.

A final few words of wisdom: we can all see that the field of foundation models and LLMs is changing so fast that even the most experienced tech professionals are wary of predicting what will come a year or so from now. The frameworks you build to connect to LLMs should be general-purpose rather than tailored to a specific model. Don’t make decisions that take you down a path that locks you in.
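One way to follow that advice in code is to make your application logic depend on a small interface rather than on any vendor’s SDK. The sketch below uses a Python `typing.Protocol`; the adapter classes, the deployment name and the model path are hypothetical placeholders, and their `complete` methods are where you would put the real client calls for whichever providers you choose.

```python
from typing import Callable, Dict, Protocol


class ChatModel(Protocol):
    """The only model interface application code is allowed to depend on."""

    def complete(self, prompt: str) -> str: ...


class FakeModel:
    """Deterministic stand-in, handy for tests and offline development."""

    def complete(self, prompt: str) -> str:
        return f"[fake] {prompt}"


class AzureGPT4Adapter:
    """Hypothetical adapter: a real one would call the Azure OpenAI SDK here."""

    def __init__(self, deployment: str) -> None:
        self.deployment = deployment

    def complete(self, prompt: str) -> str:
        raise NotImplementedError("call your Azure OpenAI deployment here")


class LocalModelAdapter:
    """Hypothetical adapter for a self-hosted, fine-tuned model."""

    def __init__(self, model_path: str) -> None:
        self.model_path = model_path

    def complete(self, prompt: str) -> str:
        raise NotImplementedError("run inference on your local model here")


# Swapping providers becomes a configuration change, not a code rewrite.
REGISTRY: Dict[str, Callable[[], ChatModel]] = {
    "fake": FakeModel,
    "azure-gpt4": lambda: AzureGPT4Adapter(deployment="my-gpt4-deployment"),
    "local": lambda: LocalModelAdapter(model_path="./models/phone-recommender"),
}


def make_model(name: str) -> ChatModel:
    if name not in REGISTRY:
        raise ValueError(f"unknown model backend: {name!r}")
    return REGISTRY[name]()


def recommend_phone(question: str, model: ChatModel) -> str:
    """Application logic: builds a prompt and knows nothing about the vendor."""
    prompt = f"You are a phone-recommendation assistant.\nCustomer: {question}"
    return model.complete(prompt)
```

With this shape, moving from a GPT4 prototype to a fine-tuned local model (or to whatever ships next year) means writing one new adapter and changing one configuration value.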